Introduction

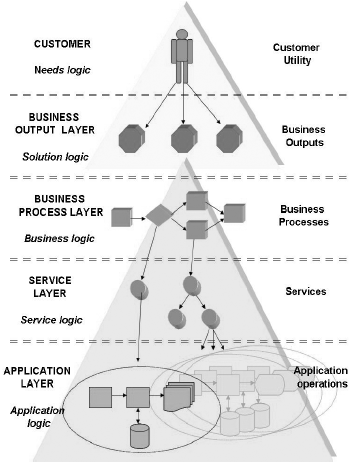

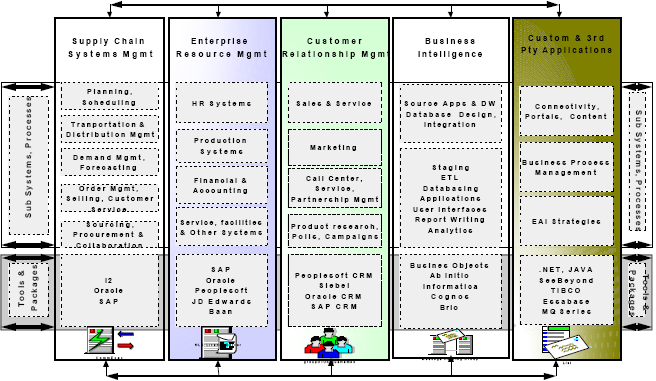

Service Oriented Architecture (SOA) and Enterprise Resource Planning (ERP) are two domains in IT that have changed how organizations interact with applications. SOA provides a means to unify massive business processes by restructuring large applications into services or smaller modules. Once an application has been split into smaller integrated modules, individual departments or people can access these modules and carry out assigned tasks. It is also possible to assemble new ad hoc applications from these modules as per user requirements.

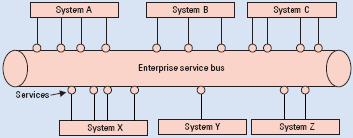

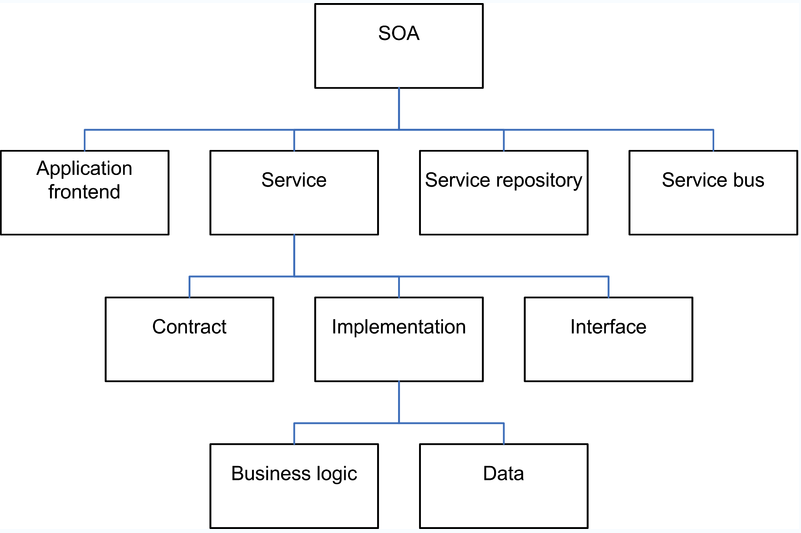

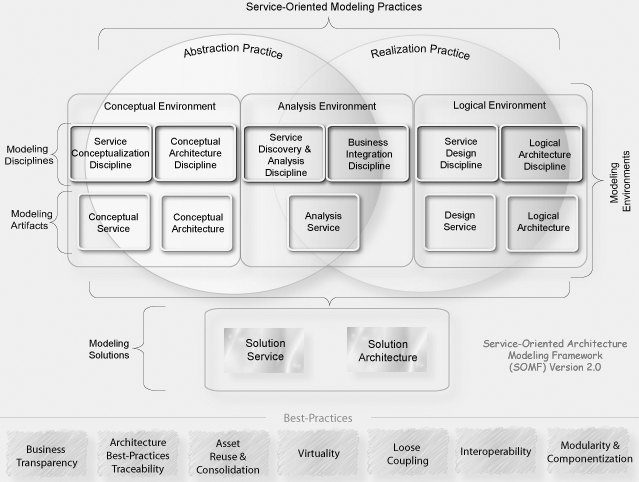

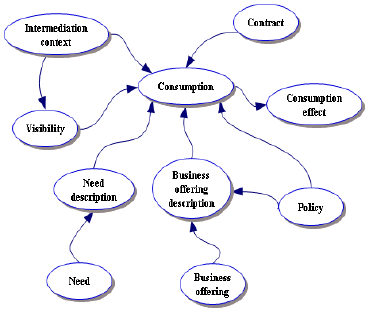

In effect, SOA provides an envelope or framework for a group of services that are able to communicate with each other. SOA removes the earlier ‘silo structure’ of applications and converts them into integrated modules. SOA also promotes reuse of software components, which speeds up software application development and lowers its cost. It should be understood that SOA provides the basic framework for integrating diverse applications and is not in itself a means of developing applications (Classon, 2004).

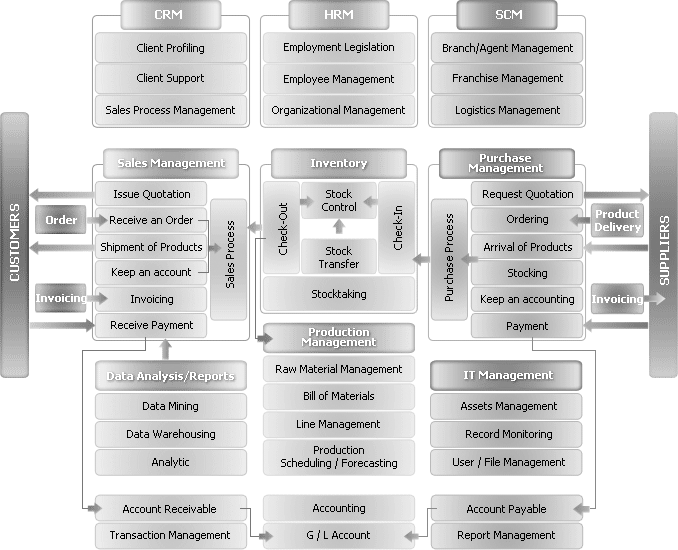

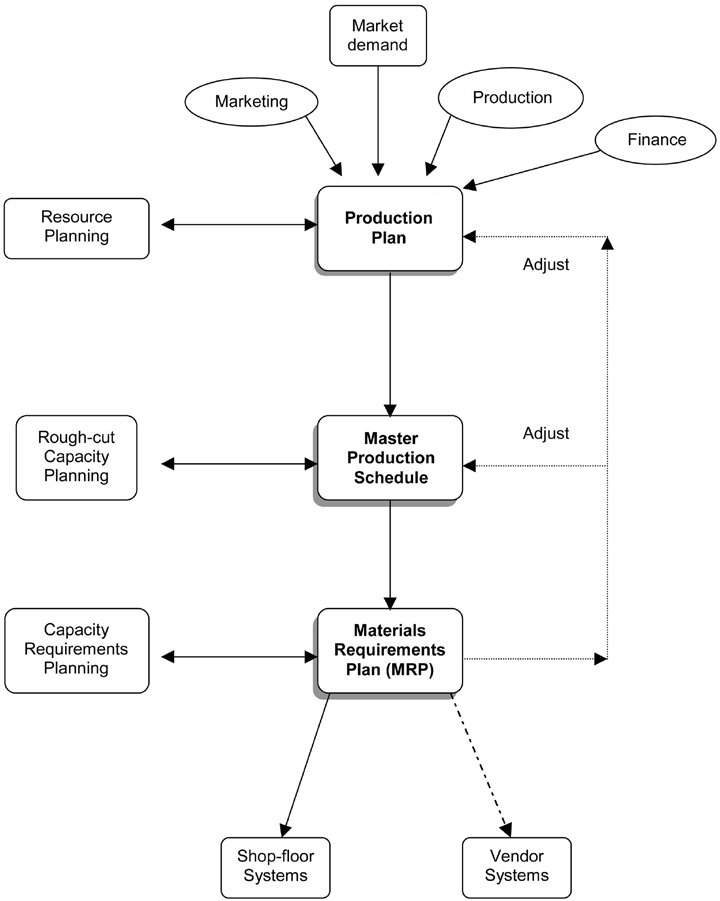

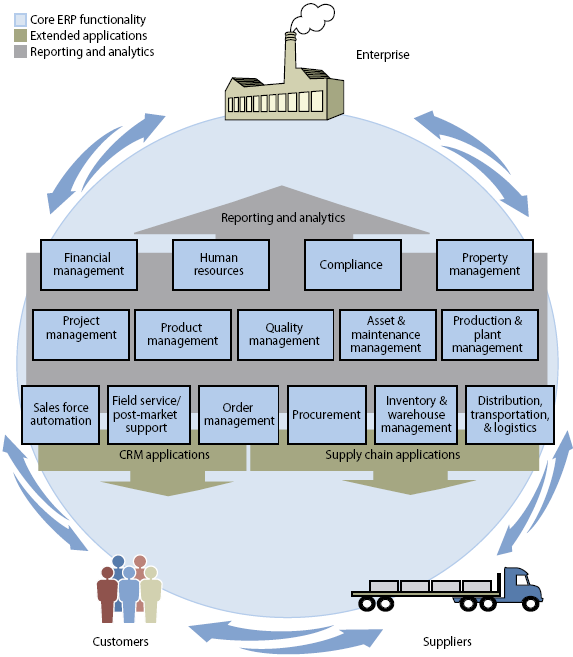

ERP applications are massive enterprise-wide software applications that integrate the diverse legacy applications a large organization has developed over the years. ERP solutions typically cover functions such as stores and inventory management, procurement, manufacturing, supply chain management, logistics, marketing and sales, among others. ERP applications are the workhorses of large organizations with global operations: they help to control production and inventory and ensure that the various processes and operations run to meet the organization's goals.

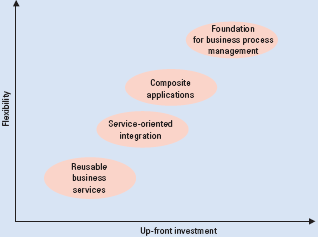

The utility of SOA and ERP in building applications was never an issue. ERP with decades of implementations in global applications has served its role very well, but the systems and applications are very expensive, complex and take months if not a couple of years to implement. SOA with its web services has become the architecture of choice for deploying smaller systems and applications for organizations. SOA is more economical to implement and low cost integrators from China and India can very well take up the development and implementation work (Brehm, 2006).

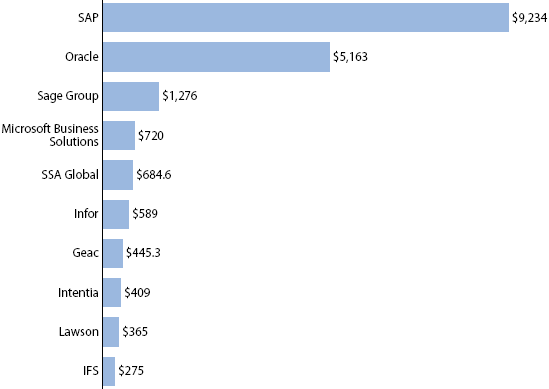

There are also many open source ERP products such as SugarCRM, Apache OFBiz, Compiere, Tiny ERP and Plexus that can be configured as per user requirements and implemented for less complex applications. These have been integrated through SOA web services for different organizations and, in fact, CRM modules that do not handle huge volumes of critical data have been fully converted into web service enabled SOA offerings. However, full-fledged ERP suites such as SAP, Oracle, PeopleSoft and others are massive in size, and it is estimated that converting them to web-enabled SOA offerings would require about 30,000 individual web services. This is not practical, as making all these web services talk to each other would be almost impossible.

However, with full-fledged ERP suites costing a few hundred million USD, major ERP vendors have realized that getting new customers is becoming more and more difficult and a majority of their sales comes from upgrades to existing customers. The market is not very eager to accept expensive ERP solutions for which organizations have to change their business processes and not the other way around. Oracle, SAP and PeopleSoft have taken up projects that involve using the SOA and web services for some of their common modules such as HR, Finance, CRM and even supply chain management (OpenERP, 2009).

This thesis discusses and researches ERP, SOA and how they can be integrated to allow strategic management for the global business environment.

Research Question

The research question is framed as “Strategic Management with Enterprise Resource Planning Systems and Service Oriented Architecture in the Context of the Global Business Environment”.

Aims and Objectives of the Study

The objective of this study is to contribute to the growing body of knowledge in this field by exploring both the technical and theoretical foundations underlying the strategic planning of ERP systems and SOA. In the context of the global business environment, the study designs a general framework to understand the process holistically, identify the critical factors of an implementation project, and assess their effectiveness. The model will also be tested in the context of the global business environment, to see how that environment affects it, by looking at the Critical Success Factors (CSFs) and developing ERP projects from a best practice perspective.

This study aims to contribute to the growing body of knowledge in this field by critically examining the alignment of IT with strategy and the role that ERP systems play in it. In scope, the study will commence by exploring the technical and theoretical foundations underlying strategic ERP systems as implemented globally, continue with a scrutiny of the inherent strengths and weaknesses pertinent to this category of IT systems, and analyze near-term prospects. We shall develop a vision of the outlook for ERP via an in-depth understanding of the growing demands on IT systems, of how success or failure is linked with corporate culture and leadership styles, and of the critical success factors.

The objectives of the study are:

- To develop a holistic view of strategically managing the acquisition and deployment of an ERP system via an authoritative review of the literature, buttressed by an empirical field investigation using a combination of qualitative and quantitative methods.

- To explore the case study approach for a synthesis of the dominant success factors for an ERP implementation.

- To highlight the major challenges commonly encountered when acquiring, implementing and maintaining ERP systems.

- To develop a thorough understanding of SOA, its implementation strategy and where the technology stands as of today.

- To critically investigate successes and drawbacks by firm size, implementation strategy, markets or countries, and centralized versus decentralized organizational management.

- To explore how ERP and SOA can be integrated to build economical and feasible solutions for enterprises.

- To examine the feasibility of using the Software as a Service (SaaS) model for SOA and ERP platforms.

- To extract best practices and other recommendations for those planning for, or already making a career of, consulting for ERP implementation.

Purpose for taking the research project

This PhD research should improve management's understanding of the systems issues that must be taken into consideration when designing an organization's Enterprise Resource Planning strategy. Enterprise Resource Planning (ERP) implementation is a technological breakthrough that is challenging and changing the operations of businesses and organizations around the world. ERP offers a software-based system that handles an enterprise’s total information system needs in an integrated fashion. It covers all aspects of operations within a company, from Finance, Accounting, Human Resources, Customer Relations and Inventory through to the end result, which is the product or service that the consumer receives. Successful strategic planning of ERP covers both the delivery of the ERP system and its implementation in the organization.

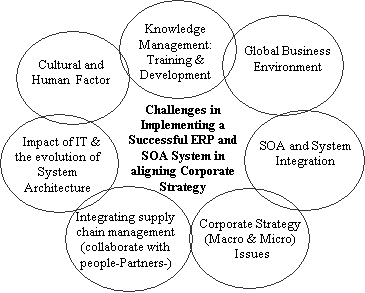

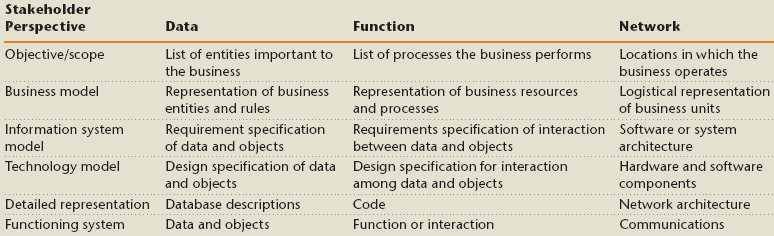

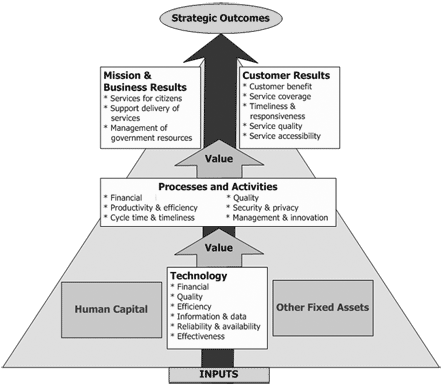

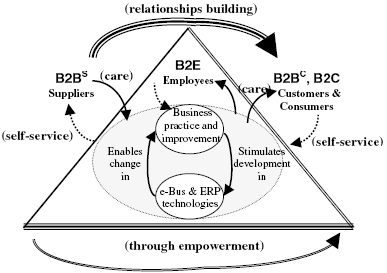

This research will focus on ERP system implementation from a strategic and technical perspective, in the context of the global business environment. It also examines the success and failure factors of ERP projects. The proposed model for strategic management with ERP systems in the context of the global business environment (Figure 1.1) links together the challenges of implementing a successful ERP system and aligning it with corporate strategy. The model comprises six components, which are integrated to complete the system: (1) cultural and human factors; (2) knowledge management (training and development); (3) the impact of IT and the evolution of system architecture; (4) the global business environment; (5) integrated supply chain management (collaboration with people and partners); and (6) corporate strategy (macro and micro) issues.

This proposal will be tested in depth in the next stage of research to show how the process of implementing an ERP system and aligning it with corporate strategy can be handled successfully. It uses a case study methodology to compare a successful ERP implementation with an unsuccessful one. The study proposes working with functionality, maintained scope, project team, management support, consultants, internal readiness, training and planning. It will focus on adequate testing, which is critical to the success of an ERP implementation, and on organizational strategies. Development, diversity and budgeting are other important factors that contribute to a successful implementation.

Rationale for the Research

This section presents the rationale for the research and shows why the paper is relevant to today’s industry needs.

SaaS, SOA and ERP

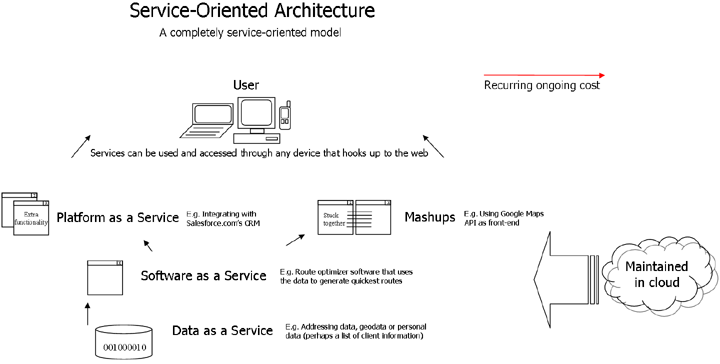

Software as a Service (SaaS) has emerged as a strong player in the application services area. In this model, a software vendor licenses an application to customers, who use it as a service on demand. Software vendors either host the application on their own servers or provide time-bound or transaction-based access to the customer.

Additionally, they may even allow the application to be downloaded to the customer's internal network server and disable it after the license period expires. An advantage of this model is that the cost of licensing is marginal when compared with the cost of acquisition. Customers who may not have sufficient development expertise can demand new applications to be delivered at reduced costs. This concept is an extension of the application service provider model that existed a few years ago. It is estimated that by 2015, SaaS would increase its business by 500%.

Many applications have been offered through SaaS, including CRM, HRM, financial and accounts management, web content management, e-mail services, IT security, IT service management and so on. Many leading firms such as Oracle have started offering SaaS. One small company, Salesforce.com, which offers SaaS and ERP solutions on SOA, reported a turnover of 1 billion USD for the 2008 year end. There is thus a growing interest in SaaS as a means to reduce software procurement, development and maintenance costs.

An important success factor for SaaS is that it works only when economies of scale are in place and when a majority of customers are ready to buy off-the-shelf, commodity products. If each customer requires some element of customization, the concept is in trouble, since the service provider does not have the means to provide customization for everyone. The concept also does not work if highly customized and niche products are required (Bennett, 2000).

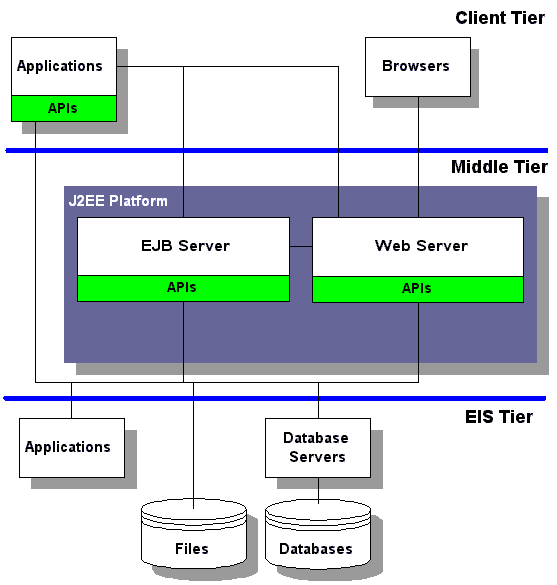

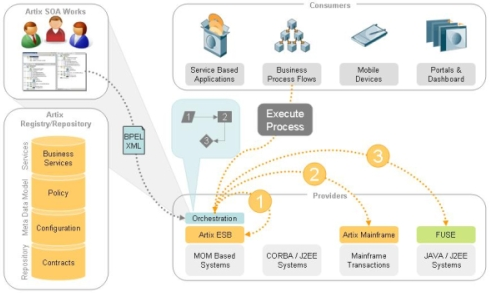

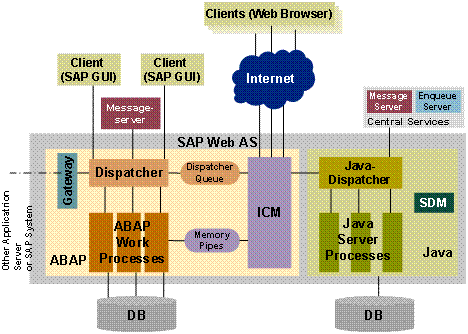

SOA forms an important driver for SaaS: by exposing each software application as a service provider, it is possible to make the different services speak to each other. The functionality of the applications can be offered to customers using public brokers, with functionality and data added as needed. SAP has introduced the ‘SAP Business By Design’ product, a fully integrated ERP offering for SMBs. The product is a good instance of combining SOA and ERP on the SaaS model. The application resides on servers hosted by the company, and access is provided to customers' PCs and handheld devices. This model also uses additional concepts such as cloud computing, infrastructure as a service (IaaS) and platform as a service (PaaS).

However, the business model carries certain risks: if the service provider goes out of business overnight, customers would suddenly be cut off from their organization's database. Reliable service providers such as Oracle and SAP have better prospects than unknown and small companies that may vanish overnight (Fu, 2008).

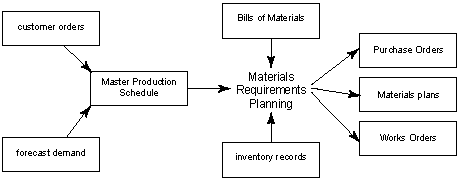

Problems with ERP

Experts in ERP implementation in various industries have often vilified ERP solutions as a ‘massive government organization that very few understand and very few can control’. While such reports are exaggerated, they reveal the frustration that these applications create during development. Part of the problem is that many of the modules for HR, finance, manufacturing, inventory, supply chain management, sales, logistics and others require a certain set of business rules. These business rules have to be clearly defined and strictly adhered to by the organization, and this rarely happens. Organizations have to operate in the open market, where the ground realities are different and keep changing. When a sales forecast is made, it factors in indicators such as GDP, the cost of living index, purchasing power parity, inflation, historic market demand and others, and uses complex algorithms to make the forecasts. The whole cycle of procurement from vendors, inventory management, billing, recruitment, manufacturing and marketing is based on these forecasts. If the forecast has some errors, there will be a bullwhip effect in both the upstream and downstream flows, and the result is either a bloated inventory of raw materials and unsold goods or a stock-out situation. Both these effects can ruin businesses.
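The amplification described above can be illustrated with a small sketch. It assumes a deliberately simple ordering rule that is not taken from any ERP product: each tier orders what it was asked for plus an adjustment proportional to the latest change in demand. The function names, the `reaction` parameter and the demand figures are all hypothetical.

```python
def propagate_orders(demand, tiers, reaction=1.0):
    """Return the order stream seen at each tier of the supply chain.

    streams[0] is end-customer demand; streams[n] is what tier n orders
    from the tier above it. The ordering rule is an illustrative assumption.
    """
    streams = [list(demand)]
    for _ in range(tiers):
        incoming = streams[-1]
        orders = [incoming[0]]
        for prev, cur in zip(incoming, incoming[1:]):
            # Order the current demand plus an over-reaction to its change.
            orders.append(max(0.0, cur + reaction * (cur - prev)))
        streams.append(orders)
    return streams


def swing(series):
    """Spread between the largest and smallest order in a stream."""
    return max(series) - min(series)


# A single 10-unit blip in customer demand (hypothetical figures).
customer_demand = [100, 100, 110, 100, 100]
streams = propagate_orders(customer_demand, tiers=2)

for level, stream in enumerate(streams):
    print(f"tier {level}: orders={stream} swing={swing(stream)}")
```

Under these assumptions a 10-unit variation at the customer becomes a 30-unit swing one tier up and an 80-unit swing two tiers up, which is the pattern of bloated inventory followed by stock-out that the paragraph describes.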

However, ERP solutions can factor in some elements of corrections manually and massive losses are usually averted. In addition, the fact remains that in many organizations with some legacy applications, integrating the databases of these applications with the central ERP solution becomes very difficult. Ensuring that the applications can speak with each other becomes a bigger problem as data integrity has to be retained, data tables have to be updated and there are many other problems that ERP implementers face. In many cases, the legacy applications are not very well defined and structured and usually the local expert would carry out regular tweaking and trouble shooting to ensure that the legacy applications are running. These problems cannot be handled by the ERP application and hence the bad name (Rettig, 2007).

How SOA could address the ERP problems

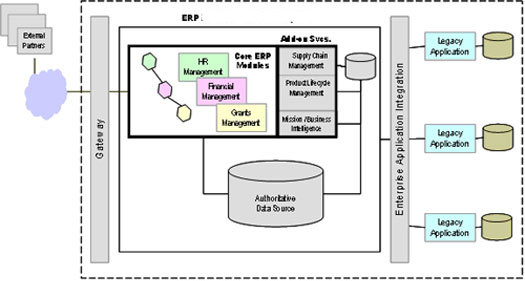

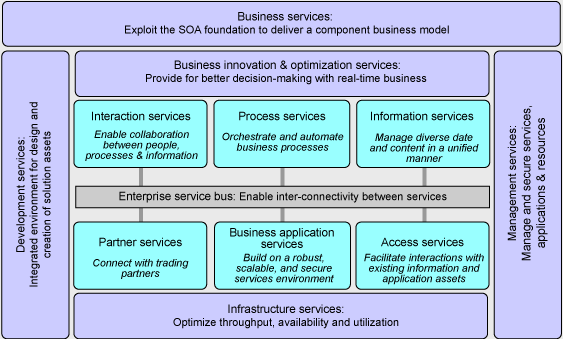

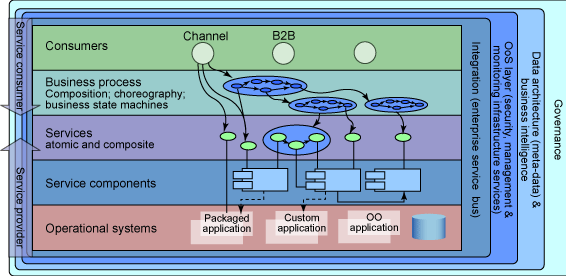

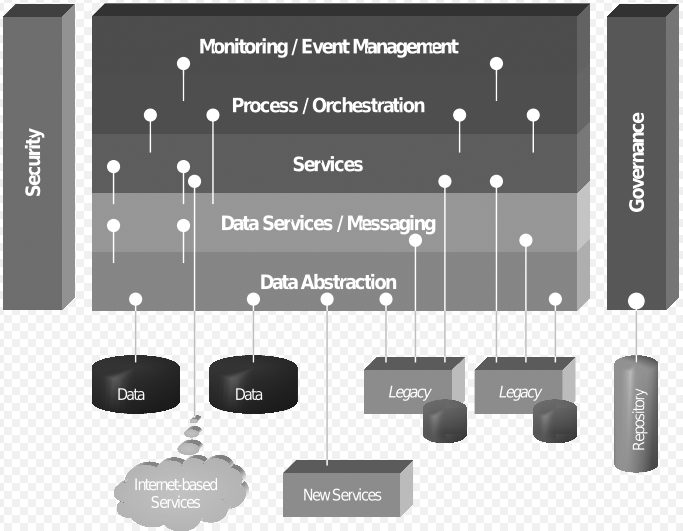

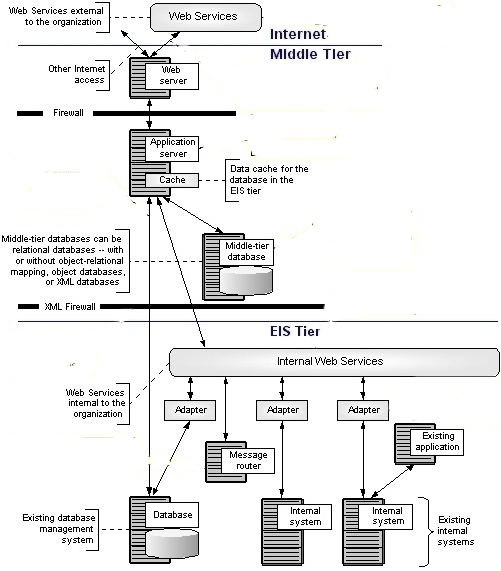

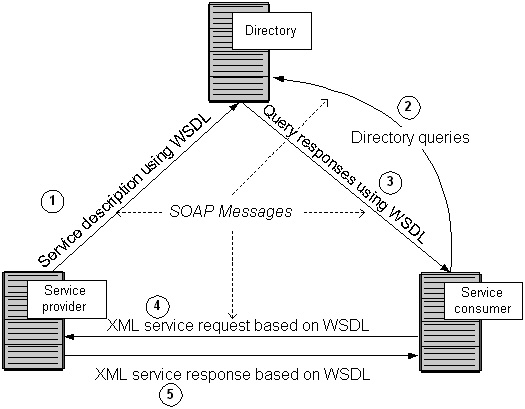

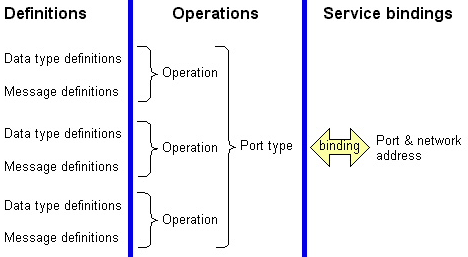

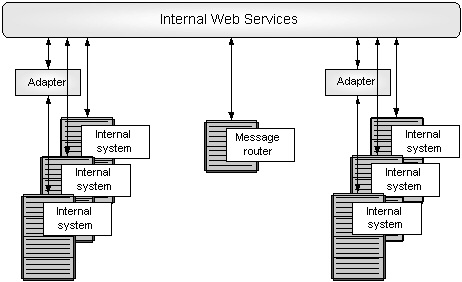

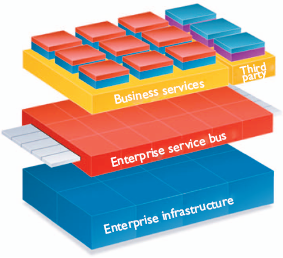

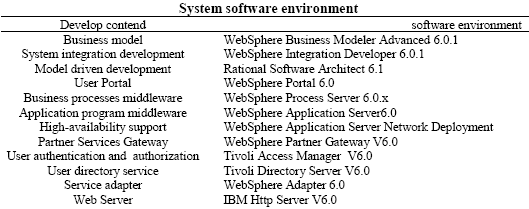

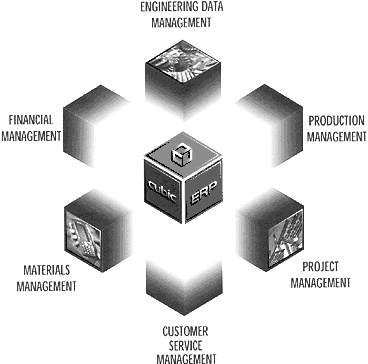

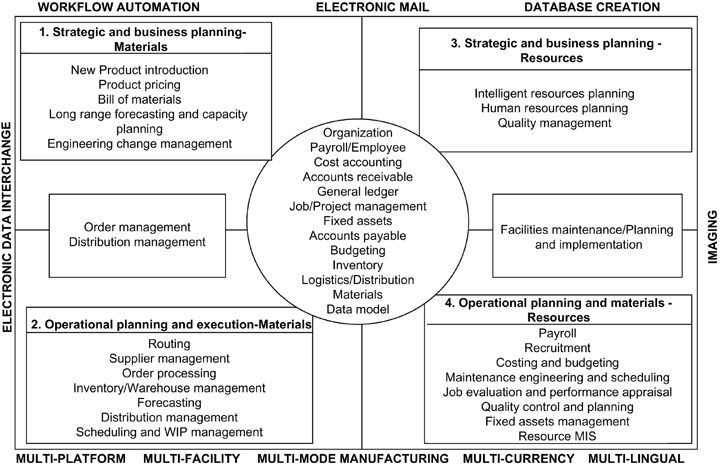

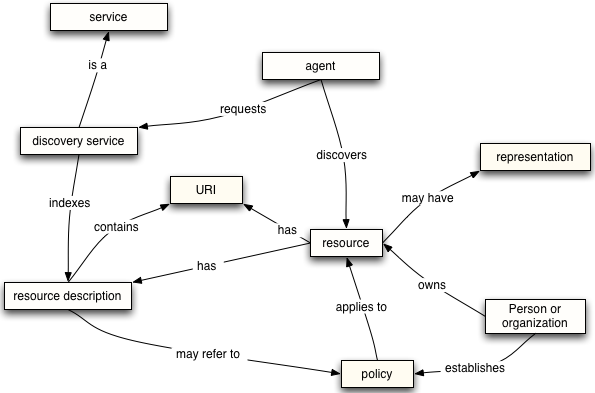

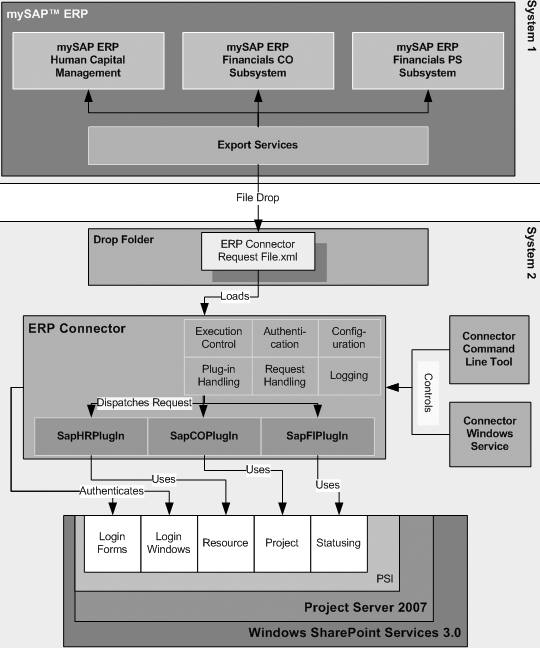

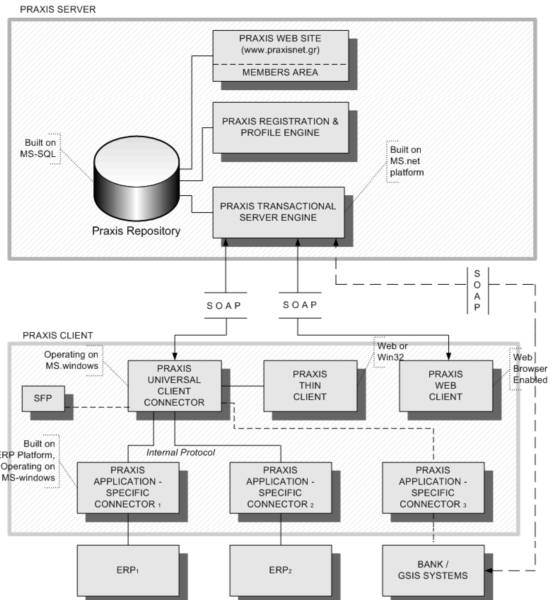

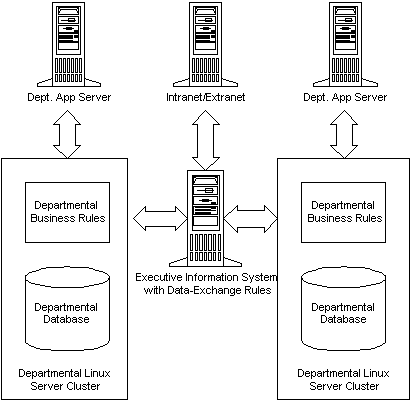

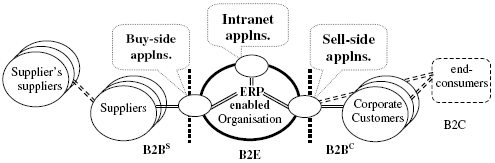

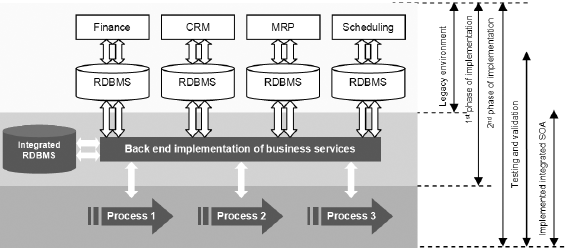

The fact remains that large, complex ERP solutions contain a great deal of embedded logic that is the result of years of fine-tuning the code and can be regarded as best practice. Widely used modules such as finance and HR have extensive embedded logic. SOA helps to extract and leverage the varied IT capability that is embedded in large applications such as ERP and in the various modules that form business applications. It is possible to extract and integrate the best features of important ERP modules using SOA and to build applications that suit user requirements. Please refer to the following figure that illustrates a typical ERP layout (Specht, 2005).

As seen in the above diagram, core ERP modules such as HR management, financial management and supply chain management are connected to an authoritative data source. The prospective SOA framework regards these core modules of the ERP application as the core business enabling services. Typically, ERP applications treat modules such as supply chain management and product life management as plug-in applications that can be added on to the core modules as and when required. These add-on modules may be very important to the organization's objectives and may also fall in the area of key success indicators. They are often crucial for a department and they work to meet the organization's needs.

However, these modules again require extensive configuration, customization and may be driven by specific enterprise business rules. The core modules such as financial management would have to follow the accounting and regulatory rules of a country. There is not much difference in the manner that accounts are entered, journal and voucher entries created in different companies and any financial statements that are made have to conform to certain regulatory bodies. It is always possible to use these core modules among different enterprises to suit individual needs with a little bit of configuration.

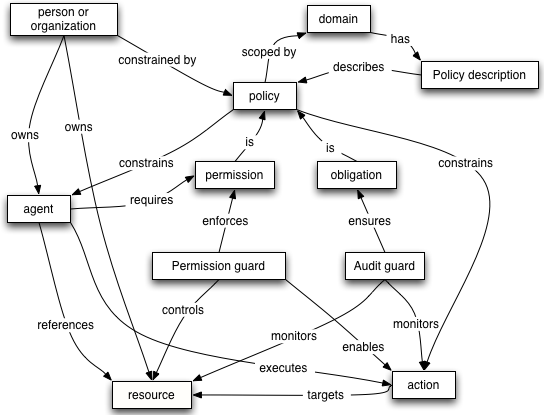

The add-on modules, on the other hand, display the real skill of an ERP vendor who has spent millions of man years in developing these solutions, and they reflect the best practices of an industry. These add-on modules are again driven by specific business rules that are unique to a company, and it would not be possible to plug them in and use them in other enterprises. Any ERP vendor or implementer who wants to implement the core modules and add-on modules integrates these modules and uses them as business services. If SOA is adopted as a framework for transforming the organization, then when an ERP implementation is done, all the IT systems of the business, including applications, legacy applications and infrastructure, have to be adapted to the SOA framework. Thus, the ERP modules would be central services for the SOA framework and help to meet the SOA objectives (Maurizio, 2007).

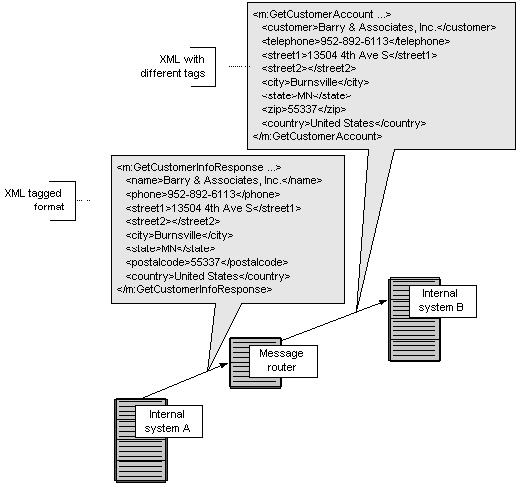

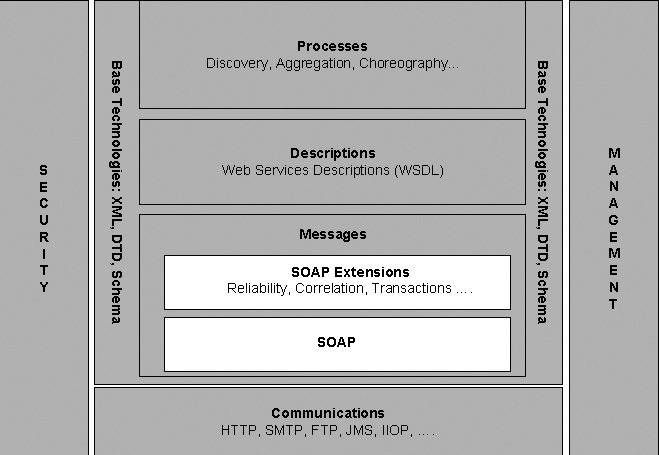

SOA depends on modularity and on service layers that can be arranged in nested dependencies of robust services, so that they can be used to run business functional requirements. SOA differs from traditional architecture in that it attempts to separate data from business logic by providing separate program layers that different applications can share. As a result, when the business logic is similar, it does not have to be written into each application that uses it; instead it can be called from a central repository of business logic and business rules.

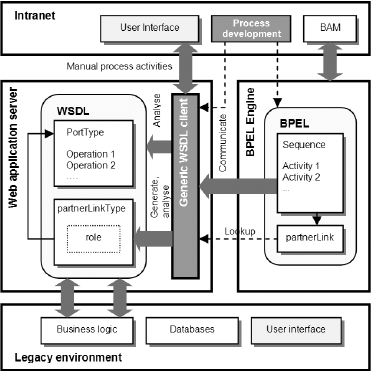

This also implies that when business logic has to be changed, it needs to be modified in one place only and far less effort is required to implement the change. Multiple business applications can then use the changed business logic. Interestingly, ERP solutions are in many cases provided with some web service tools and follow the web standards that are implemented across a wide number of applications, so that disparate legacy applications can be integrated on different technological platforms (Muscatello, 2008).

While web services from ERP applications are not very robust in terms of communicating with external applications and rather follow a dedicated set of instructions, these services can be used through SOA to allow the integration of the legacy applications and the business services of ERP applications. While it is expected that developing and using web services for ERP integration would take some time given the extent of coding and framework to be developed, a start has to be made somewhere. Once the initial developments are made and the lessons learned are captured, further implementations would become much more straightforward (Rego, 2007).

To a large extent, SOA can be utilized to hide the complexity of an organization's legacy applications. When portal access to the legacy applications is provided, customers see consistent and common views of the data and business logic, even though the information is accessed by different users through multiple applications that may be operating in different production environments. Since ERP solutions are prepackaged with functionality, they can be used along with embedded development tools within the SOA framework to create the web services support required for integrating legacy applications. If SOA is properly implemented, it can save time and resources in legacy integration. Custom-built applications can be installed along with business enabling services and activated as important ERP solution components. A beginning can be made with the core ERP applications such as HRM and financial management, and further add-on applications can then be integrated (Carey, 2008).

Competition between SOA and ERP

There has been widespread speculation that SOA would spell doom for ERP and that, with the introduction of SOA, ERP will become obsolete. Nothing could be further from the truth, and such speculation is founded on ill-conceived notions. SOA provides a framework designed to link applications that can use web services. By itself it cannot do anything; it still needs applications to integrate. The fact remains that big ERP vendors such as SAP and Oracle build complex solutions primarily for large corporations at the enterprise level. Combining SOA and ERP would allow small ERP vendors to also provide solutions to small and medium businesses. However, these small vendors would still be building solutions that can be run and integrated using web services. This development puts the interests of the big ERP vendors at risk, since they would lose out on the opportunity that SOA presents if they do not accept it. The very fact that large ERP systems are difficult to implement and make integration a big problem encourages the use of SOA. The combination of SOA and ERP will therefore go ahead in any case, and if the big ERP vendors do not join in, they will be left behind (Hansen, 2006).

There are some small ERP vendors such as Open ERP that offer complete ready-to-implement solutions, ranging from 1500 Euros for SMB applications to 6400 Euros for corporate applications. Such companies have developed robust solutions that can be deployed through SOA with much less effort and at far lower cost. Since one can always buy modules as required, further upgrades become much simpler (OpenERP, 2009).

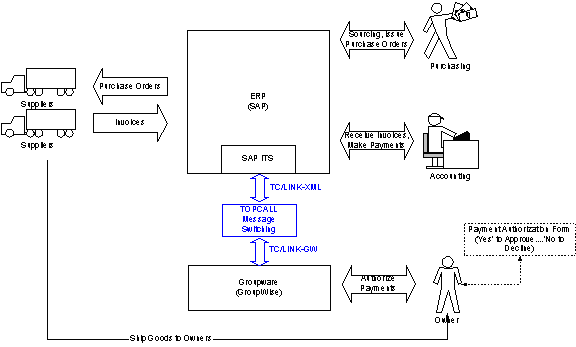

How ERP and SOA can work together

The main problem with ERP is a lack of agility and rich functions that allow quick scenarios and business cases to be run. What the market currently requires is a sharp and reduced reaction time that would allow quick deployment of applications (Kashef, 2001). By using SOA, it is possible to create an execution platform that would function along with ERP and this would reduce the complexity. The execution platform can be used to handle any process change by changing the business rules or the configuration manager and not resorting to complicated code changes in the ERP applications that would take a lot of time followed by testing to see how it has impacted other functionalities. Such a move would allow very quick completion of objectives since each software application would present its capabilities as a service and the services can be changed as required (Classon, 2004).

As an example, one could consider that a certain product has its price revised as per different slabs based on number of units bought by the customer. If a sales and inventory ERP system is running, it would be very difficult to change the price. Some actions would be required such as seeing the areas where the product is sold, name and address of the distributor so that they can be informed, informing the warehouses and authorizing the changes, holding up inventories of items in the pipelines, attempting to recall the products, changing the stock keeping unit in all locations, informing partners and so on. The task would become much more difficult if information had to be entered manually and all the databases refreshed and this would probably take a few hours.

However, by using SOA, the business rules can be changed at one location in the execution platform and the new rates pushed through the system to all locations. This process would take a few seconds at the most and would save costs and time. SOA also allows web services to be created and reused for purposes such as product recall, placing a product on hold, upgrading products and so on. Web services can be created for each of these purposes and applied as and when required. It should be noted that ERP has not been done away with: it still forms an important part of the business function, and SOA is only being used to interact with the ERP application (Hitt, 2002).
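The slab-pricing example can be sketched as follows. This is a minimal illustration under stated assumptions, not the interface of any ERP or SOA product: `PricingService`, `unit_price`, the slab boundaries and the prices are all hypothetical. The point it shows is that every location asks one shared service for a price, so a slab revision is made once.

```python
def unit_price(units, slabs):
    """Return the unit price for an order size.

    slabs is a list of (minimum_units, unit_price) pairs; the price of the
    highest slab whose minimum is reached applies.
    """
    price = None
    for minimum_units, slab_price in sorted(slabs):
        if units >= minimum_units:
            price = slab_price
    if price is None:
        raise ValueError("order size is below the smallest slab")
    return price


class PricingService:
    """Hypothetical shared service that owns the slab rules."""

    def __init__(self, slabs):
        self._slabs = list(slabs)

    def update_slabs(self, slabs):
        # The single place where the business rule is changed.
        self._slabs = list(slabs)

    def quote(self, units):
        return units * unit_price(units, self._slabs)


# Hypothetical slabs: 1+ units at 10.0, 100+ at 9.0, 500+ at 8.0.
pricing = PricingService([(1, 10.0), (100, 9.0), (500, 8.0)])

# Two locations call the same service instead of keeping their own price tables.
warehouse_quote = pricing.quote(250)
distributor_quote = pricing.quote(250)

# One rule change is immediately visible to every caller.
pricing.update_slabs([(1, 10.0), (100, 8.5), (500, 7.5)])
revised_quote = pricing.quote(250)

print(warehouse_quote, distributor_quote, revised_quote)
```

In a real deployment the service would sit behind a web service interface and the ERP application would remain the system of record; the sketch only shows why the revision does not have to be repeated at each location.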

Methodology Used

The paper uses a combination of qualitative and quantitative research methods. Research has been performed using a literature review of peer-reviewed journals, books by experts in ERP and SOA, reputed newspapers and databases of service providers such as SAP, Oracle, PeopleSoft and others. A list of key words was prepared for the topic and a search was conducted using online libraries such as Questia, Thomson Gale and ProQuest. Content was obtained from these archives and from some printed publications, and was sorted according to its appropriateness for the research questions. A list of references was then created, the required content was read and interpreted, and the analysis and its implications were written down in this paper. A questionnaire was also prepared and presented to some professionals in SOA and ERP, and their replies were analyzed to form an expert opinion on the subject.

Technology Acceptance and Diffusion

History has seen some inventions that appeared technically sound and useful but were not adopted by the mass market initially. Examples include the escalator, the steam boat and many other items. People, including the nobility, the scientists of the ancient world and many others, were simply not ready to accept new and advanced technology that would have helped them immensely. Technology thus took some time to diffuse into the public domain, and there was a period of delay before it found wider acceptance and use. The time required for diffusion of technology has indeed reduced, but there are still some psychological barriers that prevent a new technology from being adopted. This chapter examines some well-known theories of technology adoption that are relevant to the thesis.

The topic of technology adoption and the delay before mass adoption is important, since ERP and SOA integration needs a sufficiently broad base of adopters. Innovation and development will be assured only when application developers can see that there is sufficient potential and a sufficient market.

Technology Diffusion Theories

This section presents a few well-known theories on technology diffusion and acceptance.

Rogers Diffusion of Technology

Rogers (1962), in his book ‘Diffusion of Innovations’, speaks of how technology is diffused in a society. He describes diffusion as the process by which an innovation is communicated through certain channels among different groups in a social system. This process is very important when it comes to forming models of consumption; to a certain extent it has given rise to the ‘keeping up with the Joneses’ syndrome, and it has a direct bearing on the thesis objectives. The author attempted to explain the mechanics of why, how and at what rate innovations, new products and technologies spread through different social groups and cultures.

The findings hold for technologies, consumer goods, food items and so on. The author undertook the study in 1950 to find the effectiveness of advertisements used in broadcasts. In those times, many products could be called innovations and very few people had used them. The study brought up some facts that are still used in marketing. The author noted that, contrary to expectations, print media or TV advertisements were not the main reason why people bought products.

In a society, there are different types of adopters of a technology or product: early adopters or innovators, secondary adopters, tertiary adopters, quaternary adopters and then the laggards. Rogers asserted that it would be the innovators who first try out a product. This group was often well educated, came from well-to-do families and had a high net worth. The group also commands respect in its society, and it is this group that first buys a product or adopts a new technology. Next in line were the secondary adopters, who would wait and watch to see what the innovators had to say about a product and would buy it only if the innovators endorsed it.

The tertiary adopters would wait further to see how the innovators and the secondary adopters felt about a product and to ascertain that the quality and price were right; if everything was acceptable, they would in turn adopt the product. The quaternary adopters were next in line and would require further reassurance that the product was indeed suited to their needs before making their decision. The laggards were the last in line; they were frequently the oldest in the group and the last ones to buy or adopt a technology.

Rogers pointed out that in a closed society, it would be much more effective if manufacturers first targeted the innovator group and encouraged them to buy a product or adopt a new technology and then endorse it. Sports goods manufacturers, fashion brands and others still use this method of advertisement widely, and celebrities such as sports stars or film stars are seen using a product so that sales pick up. However, overuse of this method, or multiple endorsements by one star, leads to overexposure and loss of effectiveness of the medium.

Technology adoption lifecycle

Brown (2003) has provided a good review of the technology adoption lifecycle, based on the Diffusion of Innovation model as described by Rogers. The author has based her article on the lower than expected sales of PCs in the US domestic market in 2003. Leading manufacturers such as Gateway, Dell, Apple, AMD and others had assumed that the sales would increase once the cost of PCs was lower than 1000 USD. These predictions had been made by some leading marketing agencies who were assuming the people who were interested in technology would buy more products once the technology became affordable.

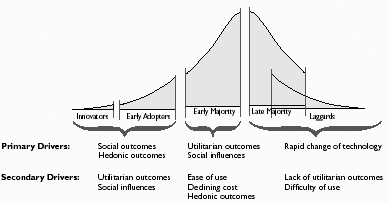

The author reports that the market researchers did not consider whether price was the only barrier for those interested in buying a PC or whether there were additional barriers. The author calls this the ‘non-adoption phenomenon’, and it is very important when the question of mass consumption of a technology or of removing the digital divide has to be considered. The model proposed by Rogers was slightly modified, and the accompanying illustration shows the resulting technology adoption life cycle.

There are five categories of technology adopters and they are innovators, early adopters, early majority, late majority and the laggards. According to Brown for any innovation, 16 percent of the adopters are made of innovators and early adopters while 34 percent is made of the early majority, 34 percent is made by the late majority and the remaining 16 percent by the laggards. Non-adopters are not included in this model since it is assumed that everyone would ultimately adopt the technology. As shown in the illustration, there are some primary and secondary drivers for each category.
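The category shares quoted above can be worked through as simple arithmetic. The sketch below takes only the four percentages from the text; the population of 5,000 prospective adopters and the function names are hypothetical and used purely for illustration.

```python
# Shares of adopters as quoted in the text (percent of all eventual adopters).
ADOPTER_SHARES = {
    "innovators and early adopters": 16,
    "early majority": 34,
    "late majority": 34,
    "laggards": 16,
}


def cumulative_adoption(shares):
    """Running total of adoption (percent) after each category has adopted."""
    running = 0
    result = []
    for category, percent in shares.items():
        running += percent
        result.append((category, running))
    return result


def segment_sizes(population, shares):
    """Number of adopters in each category for a given population."""
    return {category: population * percent // 100
            for category, percent in shares.items()}


cumulative = cumulative_adoption(ADOPTER_SHARES)
sizes = segment_sizes(5000, ADOPTER_SHARES)  # hypothetical market of 5,000 firms

for category, percent in cumulative:
    print(f"{category}: {sizes[category]} adopters, {percent}% cumulative")
```

The cumulative figures show that the model reaches half of the market only once the early majority has adopted, and that, because non-adopters are excluded, the shares always sum to 100 percent.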

Challenges for technology adoption

Venkatesh et al. (2001) speak of the challenges for technology adoption, and this area is very relevant to the thesis objectives. According to the research conducted by the authors in American households, PC adoption is influenced by hedonic and utilitarian outcomes and by social outcomes such as status in society. The authors also reported that non-adopters were influenced by the quick pace of change in technology and by the fear that the products bought may become obsolete. Technology implementers therefore have to consider social and hedonic influences as well as the cost factor.

Studies show that cost was related to the technology: while the basic configuration of a computer, such as RAM, processor clock speed and hard disk space, is important, there is a marked deviation when it comes to buying higher-end computers that may have added features. Fear of the product, along with the cost, acts as a main barrier for non-adopters, as they tend to be influenced by the frequent advertisements of newly introduced features. While the study was related to the PC market, the arguments would hold for any new, constantly evolving technology and market.

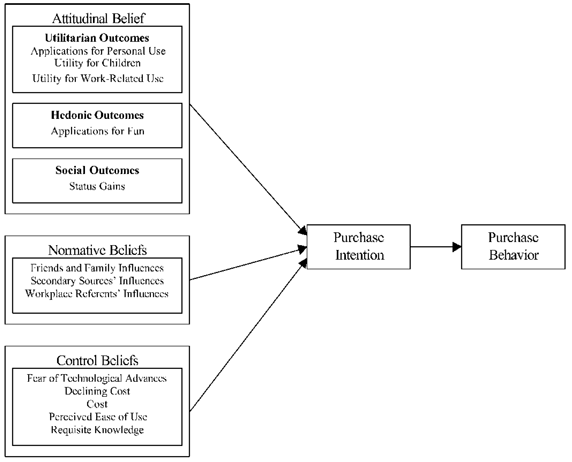

As seen in the above illustration, purchase intention and purchase behavior are reinforced by five factors: attitudinal belief, hedonic outcomes, social outcomes, normative beliefs and control beliefs. All these lead to the technology adoption behavior, which is equated with the purchase behavior since both adoption and purchase are regarded as commitment. Attitudinal belief is made up of utilitarian outcomes, which include applications for personal use, utilities for children and work-related use. These are the core beliefs that would make a person take up technology adoption, and they form the topmost tier of the factors.

Next come the hedonic outcomes: certain technologies are adopted only for the pleasure they give. Social outcomes include factors such as status gains, which means that a technology is adopted just because it would give the adopter some kind of social status. In some people, this outcome is more dominant than the other factors, and such adopters tend to feel that adopting a technology would give them a lot of prestige. People who adopt a technology because of this factor would also be called innovators as per Rogers' model of technology diffusion. Normative beliefs are formed from the influence of friends and family, from secondary sources and from interactions in the workplace. People in this group would tend to fall in the secondary and tertiary groups of Rogers' model.

The author suggests that this approach is very prevalent in close social groups, in organizations and where people belong to a network of friends. Typically, people from this group would wait until someone they know has tried out a product, and the decision to adopt or abandon the technology would depend on the feedback they receive. In today’s connected world, with communities such as Orkut and Facebook, people from different geographies ask for feedback about a particular product or technology and also offer advice and opinions if they have any experience with it. Workplace influences also play a very strong role, as people tend to trust the people they work with every day. Control beliefs are the barriers that lead to non-adoption of a technology. One such factor is the fear of technological advances that threaten to overwhelm a person who would not be able to cope with the changes.

Another factor is cost. It has been observed that as time passes, technology becomes cheaper, and this is the case with products such as mobile phones, computers, laptops and so on. In such a case, people would prefer to wait in the hope that the price will keep falling. However, this influence and belief do not last for long, and ultimately the person adopts the technology in its available state (Venkatesh et al., 2001).

Theory of Reasoned Action – TRA

Originally propounded by Fishbein (1975), the theory of reasoned action – TRA is based on social psychology concepts and argues “peoples voluntary behavior can be predicted by their attitude for that behavior and how they think that other people would view them if they carried out that behavior”. Behavior is formed by people’s attitude when it is combined with subjective norms. There are three constructs in TRA: behavioral intention – BI, attitude – A and subjective norm – SN, and the relation between these entities is BI = SN + A.
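The relation BI = SN + A can be expressed as a small function. The sketch below is only illustrative: the numeric scale for attitude and subjective norm is hypothetical, and the optional weights `w_attitude` and `w_norm` are an assumption added to reflect the point that the two factors need not carry equal weight. With both weights left at 1.0, the function reduces to the relation as stated above.

```python
def behavioral_intention(
    attitude: float,
    subjective_norm: float,
    w_attitude: float = 1.0,
    w_norm: float = 1.0,
) -> float:
    """Combine attitude (A) and subjective norm (SN) into an intention score.

    The weights are hypothetical; with the defaults this is BI = SN + A.
    """
    return w_attitude * attitude + w_norm * subjective_norm


if __name__ == "__main__":
    # Assumed scores on an arbitrary scale where negative means unfavorable.
    attitude, norm = 2.0, -1.0
    print("equal weights:", behavioral_intention(attitude, norm))
    print("norm-dominated:", behavioral_intention(attitude, norm, 0.5, 2.0))
```

In the second call the negative subjective norm outweighs the positive attitude, which mirrors a situation in which social influence dominates the person's own beliefs.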

If a person intends to carry out a particular type of behavior, then he is likely to display it. People’s intentions are influenced and directed by subjective norms and by their attitude towards the behavior. Behavioral intention measures the relative strength of a person’s intention to carry out a certain behavior. Attitude is made up of the beliefs that a person holds about the results of performing the behavior, together with how he values those results. Subjective norm is regarded as a mix of the perceived expectations of relevant individuals and groups and the person’s intention to comply with those expectations.

In other words, the subjective norm is a person’s perception that the people who are important to him feel that he should or should not carry out a certain behavior. The author points out that norms and attitudes do not have equal weight in predicting behavior; these factors have different effects depending on the situation and the individual. The following figures illustrate the main points of the theory:

Attitudes represent the total sum of the views and beliefs that a person would have for a specific behavior that would again depend on the analysis of such beliefs. As an example, a smoker may have a different attitude towards the health risks posed by smoking and he may even feel that smoking is bad for the health. Nevertheless, his behavior would be contrary to his beliefs when he continues smoking.

Another example is that of exercise. A person may have a very positive attitude towards fitness and exercise, may hold athletes in high regard and may even take up an exercise routine. However, he may believe that exercise is very tiresome and uses a lot of time and energy, and gradually these beliefs lead him to abandon the routine. Subjective norms concern the influence that other people in a social group exert over a person’s behavioral intentions or beliefs. A balance is struck between what people in the social environment feel and one’s own beliefs, and the outcome depends on the more dominant of the two. As an example, a person may belong to a group of friends who exercise regularly, and the social influence would push that person to take up exercise.

However, eventually the person’s beliefs would suggest that exercise is a waste of time, and this creates a type of self-hypnosis that leads the person to view exercise as a wasteful pastime or as something for the wealthy, who have a lot of time to spare. The person may eventually abandon the exercise plan and may even forgo the company of his friends, as their influence and beliefs are contrary to his own. This is an example of a negative subjective norm. On the other hand, it is quite possible for the social influence to exert a positive subjective norm. As an example, drug or alcohol addicts may undergo group rehabilitation therapy, where addicts gather with the single objective of abandoning their addiction.

A person undergoing this kind of therapy would be influenced by the collective social norm, which attempts to push him away from alcohol or drugs, while his personal beliefs, in this case the addiction, may want to prolong the habit. When the collective social influence is dominant, it may lead the person to abandon his addiction, and this happens in many cases. On the other hand, if the personal belief or addiction is so severe that it is immune to the influence of subjective norms, then the addiction will continue.

Ajzen (1980) has made some interesting comments about the TRA model. In practice, there are three conditions that limit the utility of subjective norms and attitudes for predicting intentions and, further, the use of intentions for predicting behavior. The three conditions are goals versus behaviors, the availability of choices, and intentions versus estimates. The first condition, goals versus behaviors, concerns the difference between a goal intention and a behavioral intention.

As an example, a person may decide to lose weight and set a goal of losing 10 kilograms in 6 months; this is the specific goal intention. The behavioral intention then comes in: he may decide to use some type of diet medication that makes him feel less hungry, so that he eats less food and thus achieves his goal of losing 10 kilograms. The second condition is the availability of choices. Taking the previous example, the person has a few choices available: exercising to reduce weight, going on a crash diet, combining exercise with a diet, or taking only medication.

Among these choices, the first, second and third are the most logical and the most likely to give results, but they are also the most difficult, while the last choice of taking only medication is the least likely to produce sustained results. The author implies that in many instances, subjective norms and attitudes strongly influence people’s behavior, and people may take the path of least resistance and effort to achieve their goals. One good example is the goal of becoming rich and earning a million dollars. Many people work hard their whole lives in legal and socially acceptable jobs and may never achieve this goal, while some people might resort to illegal means such as theft to earn the money. In such cases, social norms may dominate, change people’s attitudes and decide how they behave. The third condition is intentions versus estimates, which occurs when a person has one set of intentions but chooses to act differently.

Bass Diffusion Model

Bass (1969) introduced the Bass Diffusion model, which describes mathematically how new technologies and products are adopted as users of the product interact with novices or potential users. The theory builds on Rogers' Diffusion of Innovation model. The Bass model is used by researchers and marketing organizations when they want to introduce a new type of product in the market. While Rogers' model relied on fieldwork and practical observations of the agricultural industry of the US in the 1950s, the Bass model is based on the Riccati equation.

The model has three coefficients. The market potential, m, is the total number of people in a market who would ultimately use the product. The coefficient of innovation, p, is the probability that people who are not using the product will begin to use it because of external influence, that is, the influence of early adopters and publicity from the media. The coefficient of imitation, q, is the probability that people who are not using the product will start using it because of internal influence, that is, word-of-mouth publicity from early adopters or from people in close contact with them.

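The formula used to predict $N_t$, the number of adopters at a given time, is:

$$N_t = N_{t-1} + p\,(m - N_{t-1}) + q\,\frac{N_{t-1}}{m}\,(m - N_{t-1})$$

where $N_t$ is the number of adopters by the end of time period $t$ and $N_{t-1}$ is the number by the end of the previous period.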

As per the empirical research of Mahajan (1995), the value of p lies between 0.01 and 0.02 and q has a value of 0.38. These values can be used in the equation, along with probabilistic values for the other coefficients, to find the number of adopters in a society. When applied with good fieldwork, the model is found to produce very accurate results and allows forecasting to a high level of accuracy. Product manufacturers and technology innovators have often used this model to forecast sales and revenue generation among the target group. Certain modifications have been made over time to increase the accuracy of the model and to suit different markets and product levels.
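The recurrence for the number of adopters can be iterated directly. The sketch below is only illustrative: it uses p = 0.01 and q = 0.38 from the values quoted above, while the market potential of 100,000, the starting point of zero adopters and the function names are assumptions made for the example.

```python
def bass_cumulative_adopters(
    m: float, p: float, q: float, periods: int
) -> list[float]:
    """Iterate N_t = N_{t-1} + p(m - N_{t-1}) + q(N_{t-1}/m)(m - N_{t-1})."""
    if m <= 0:
        raise ValueError("market potential m must be positive")
    adopters = 0.0  # assumption: nobody has adopted before the first period
    path = []
    for _ in range(periods):
        remaining = m - adopters  # potential adopters still outside the market
        adopters = adopters + p * remaining + q * (adopters / m) * remaining
        path.append(adopters)
    return path


def new_adopters_per_period(path: list[float]) -> list[float]:
    """Difference the cumulative path to get new adopters in each period."""
    previous = [0.0] + path[:-1]
    return [current - before for current, before in zip(path, previous)]


if __name__ == "__main__":
    market_potential = 100_000  # hypothetical value of m
    forecast = bass_cumulative_adopters(market_potential, p=0.01, q=0.38, periods=20)
    for period, total in enumerate(forecast, start=1):
        print(f"period {period:2d}: {total:10.0f} adopters so far")
```

In the first period only the innovation term contributes, since no earlier adopters exist to imitate. The imitation term grows as adopters accumulate and shrinks again as the pool of remaining potential adopters empties, so the cumulative figure approaches m.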

The model is found to work with predictable and stable goods and technologies, and it is difficult to use with fad products such as beauty products, fashion accessories, toys and other goods that may have a short shelf life of a few weeks, if not days. The model has been used extensively to forecast the market potential and sales of new, innovative products such as mobile phones, high-definition TVs and many other products.

Some software applications are available that calculate forecasts of the number of adopters of a technology, and databases are also available that give the values of p, q and m for different products, based on extensive market research (Lilien, 1999).

The market can be imagined as being made up of two groups of people: one group consists of the adopters or innovators and the other of the imitators or potential adopters. In an open system that allows the two groups to interact, there would be an exchange of ideas and concepts between them. While the innovators or adopters would be quite comfortable with the new technology, the other group is made up collectively of the late majority and the laggards. In an open system, where there is no restriction on the flow of information between the two groups, there is a constant flow of information and news, and the intensity of the flow measures the rate of infection of the ideas. If the second group of potential adopters is waiting to see beneficial results, then the rate of infection would be very fast, and adoption of the technology may become a fad or a social status symbol, with people adopting the technology for the hedonic feelings it gives.

However, if the potential group is made up of diehard cynics who are bent on stopping the adoption of the technology for political reasons, then this would indeed create a problem, and the group may very well succeed in preventing the new technology from being adopted. If such forces are not evident in the social system, the rate of infection would increase as a function of the utility of the product, the target age of the adopters and the cost. If all these are acceptable, then the rate of adoption would be very fast.

The following figure shows a graphical representation of the mechanics of flow between the two groups, the innovators and the imitators. The innovators would be few in number initially, and with the passage of time their number would start falling, not because the product has lost appeal but because the imitators are fast catching up. The imitators would start from a lower value, and then their number would rise steeply and follow a bell-shaped curve with a sharp peak. The time required to reach this peak would depend on the utility of the technology and its cost.

As seen in the graph, the curve for imitators would rise sharply and then fall at the same rate and would prolong for some time beyond the innovator curve. After a certain point of time, the technology would have become either widespread or may have become obsolete or new and better products and technology may have come up.

It must be noted that the tool should be used only at the level of product categories, such as CD players or computers. It should not be used to find the market share of individual product manufacturers such as Sony, Samsung, Philips and others.

Technology acceptance model – TAM

The Technology Acceptance Model – TAM was developed by Davis (1989) and Bagozzi (1992) and deals with how willing different users are to accept and use an information systems application. When users are faced with a new technology, two main factors can act as dilemmas or drivers and influence its acceptance and use: perceived usefulness – PU and perceived ease of use – PEOU. Perceived usefulness is the extent to which a user perceives that the information system application would help him in his job or in a particular task, while perceived ease of use refers to the extent to which the user feels that using the system would require little effort. TAM has given rise to a wide branch of technology and psychology that includes usability engineering, usability metrics and others. With the current increase in the number of information systems applications, TAM has acquired a new meaning.

For ICT to be successful, it has to be acceptable to people, it must help people to fulfill a need and it must be easy to use, and TAM helps developers to gain a better understanding of the applications they develop. TAM was based on the theory of reasoned action, but while TRA is based on the measure of attitude, TAM is built on the principles of usefulness and ease of use. There are two types of users: voluntary users and forced users. Voluntary users are members of the public who can decide whether they want to use a particular technology and who have alternative technologies available, while forced users are employees who have to use computer applications and do not have a choice since the work profile demands it. Researchers have realized that, to make technology useful, forced users must also voluntarily accept a technology, or else productivity falls. Computer applications are introduced to ease the burden on employees, increase productivity, reduce throughput times and give a quicker turnaround. But if the technology is cumbersome and not user friendly, and employees spend considerable time figuring out how it works, then the main purpose of using the technology is defeated. The following figure gives an illustration of the TAM model.

As seen in the above illustration, TAM can be used effectively to assess and predict user acceptance of ERP and SOA applications, and it finds widespread use in the industry. IT applications may cost thousands of dollars, and while the system may function very well from the developer’s perspective, the common user may think otherwise. While training is given on how to use the system, it normally consists of brief sessions that inform users about the various features of the product and how they need to use the application to complete their tasks.

TAM is used to determine the link between external variables and acceptance by the users who would be using the application in the workplace. The external variables create an external stimulus that triggers cognitive responses and influences the perceived ease of use and the perceived usefulness. These influences in turn shape the behavioral intention, which leads to the behavior. The behavior in turn leads to the actual usage. If any of the causal links were disrupted or broken, the actual usage would ultimately suffer, and the net result would be that the user would not be ready to accept the product, as it would not be useful.

Davis (1995) has pointed out that the scales used to measure TAM are regarded as valid and reliable and that the results replicate across varied technologies and applications. They function better since physical groups are formed during the constructs, and this allows for a better explanation of user acceptance. The author suggests that external influences, including system design, training users to understand the software, self-efficacy in using IT systems, involvement of users in the design of the system and the way ICT is introduced, play an important role in increasing user acceptance. The model assumes that users are literate or have some knowledge of computers and are aware of basic functionalities in IT systems.

As reported by the author during the research, the intention to use is the crucial factor that leads to use of the system. As per TRA, there is a basic fear of new technologies, and in such cases the user would have misgivings and fear about the system. When computers first made inroads into the public domain, an overwhelming number of people had misgivings and felt that ‘I would be blamed if something happens’, and this factor acts as a barrier to the intention to use and to the actual behavior.

However, this is a psychological case and it would be wrong to apply TAM to such events. Davis (1989) points out that in work organizations, people would use the application even if they did not find it particularly useful. But the intention to use is very important, and it is formed by the attitude that the user has towards the system. The attitude is formed by the perceived usefulness and by the extent to which the user feels that the system would reduce his effort in completing the tasks. Keeping this in mind, Davis framed another model for TAM, and this is illustrated in the following figure.

As seen in the above figure, there is a new construct called Attitude towards Using – A, which is framed by the two factors of perceived usefulness and perceived ease of use. Attitude towards using in turn leads to the behavioral intention to use – BI. It may very well happen that users move directly from perceived usefulness (U) to BI, which then leads to actual system use. The above model received a fair share of support and criticism, as doubts were raised about the heuristic correctness of the TAM model. Keeping the review comments in view, Venkatesh (2000) proposed the TAM 2 model, and this is illustrated in the following figure.

TAM 2 adds certain social influences to the perceived usefulness construct of the TAM model to increase the predictive power of the model. The influences that are added include experience, voluntariness, subjective norm, image, job relevance, output quality and result demonstrability. These social forces act on the users of the system and influence the choice of rejecting or adopting it. As suggested by Fishbein (1975), subjective norm is the user’s perception of what the people who are important to him think about his decision to use the system.

Users may decide to carry out a behavior even though they may not like the behavior or its consequences, if people who are important to them motivate their actions. This is often called peer pressure. The author hypothesized that subjective norm would have a positive influence on the intention to use only when use is mandatory; otherwise it would not have any significant effect. The hypothesis is relevant in work organizations where people have to use a system, whether they like it or not.

Voluntariness is the extent to which potential technology users and adopters view the adoption decision as non-mandatory. This factor comes into force when users feel that the socially influencing person can give rewards in case of compliance or punishment in case of non-compliance. The author suggests “voluntariness will moderate the effect of subjective norm on intention to use”. Venkatesh (2000) argues that users are susceptible to normative social influences and attempt to maintain a favorable image in their social group. Image can be described as the extent to which a person’s status in a social circle is enhanced by using a particular technology. If others in a social group regard a technology with esteem, then the user would feel that by using and adopting the technology, his standing would rise.

The author hypothesizes that subjective norm would have a positive effect on image and that, in turn, image would have a positive effect on perceived usefulness. Thus, one would assume that high technology products would have a positive image associated with them since people have a high regard for them.

Unified Theory of Acceptance and Use of Technology (UTAUT)

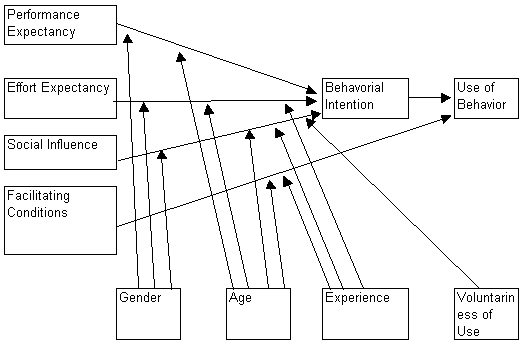

Venkatesh (2003) speaks of the Unified Theory of Acceptance and Use of Technology model – UTAUT, which unifies eight models of technology adoption. The model is used to explain the intentions of the user for using an ICT system and the usage behaviour that would result. The model is based on four constructs: effort expectancy, performance expectancy, facilitating conditions and the social influence that a user is subjected to. Building upon TAM and seven other models, UTAUT adds factors such as the age and gender of the user along with voluntariness and experience. Field research suggests that the model can account for about 70 percent of the variance in usage intention.

As suggested by Venkatesh (2003), seven of the constructs have a major impact and can be regarded as direct determinants of usage or intention in the models. From these, the author selected four as very important direct determinants: effort expectancy, facilitating conditions, social influence and performance expectancy. The author regarded constructs such as self-efficacy, aptitude for using technology and anxiety as not being direct determinants of usage or intention. In addition, there are some important moderators, namely age, gender, experience and voluntariness, which act on the core constructs. Based on these inputs, an illustration of the UTAUT model is given in the following figure.

As can be seen in the above figure, there are four core constructs: performance expectancy, effort expectancy, social influence and facilitating conditions. These are the determinants of the behavioural intention, which in turn brings about the use behaviour. Each of the core constructs is acted on by moderators that either enhance or inhibit it. Performance expectancy is acted on by gender and age, while effort expectancy is acted on by gender, age and experience. Social influence is acted on by gender, age, experience and voluntariness of use, while facilitating conditions are acted on by age and experience.

Among the core constructs, performance expectancy is the strongest predictor in each model. It is the degree to which a user believes that using the system would help him complete job-related tasks. Effort expectancy is the degree of ease associated with using the system, and this construct is moderated by gender, age and experience. The implication is that people of a certain gender and age, with a certain level of experience, would have either a high or a low value for effort expectancy.

The next core construct is the social influence and it is the amount by which a user would feel that people who are important to him would require him to use the system. This is in turn moderated by gender, age, experience and the voluntariness of use. The last construct is the facilitating conditions and it is the amount by which a user would believe that there is support from the organization that would help him to use the system.
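The relationships between the core constructs and their moderators can be summarized as a simple lookup table. The sketch below is only illustrative: the mapping restates the relationships described in this section, with effort expectancy taking the fuller list of gender, age and experience given in the description of that construct, while the names `UTAUT_MODERATORS` and `constructs_moderated_by` are hypothetical.

```python
# Moderators acting on each core construct, as described in this section.
UTAUT_MODERATORS = {
    "performance expectancy": {"gender", "age"},
    "effort expectancy": {"gender", "age", "experience"},
    "social influence": {"gender", "age", "experience", "voluntariness of use"},
    "facilitating conditions": {"age", "experience"},
}


def constructs_moderated_by(moderator: str) -> list[str]:
    """Return the core constructs on which a given moderator acts."""
    return sorted(
        construct
        for construct, moderators in UTAUT_MODERATORS.items()
        if moderator in moderators
    )


if __name__ == "__main__":
    for name in ("age", "gender", "experience", "voluntariness of use"):
        print(f"{name}: {', '.join(constructs_moderated_by(name))}")
```

Reading the table by moderator rather than by construct shows that age acts on all four core constructs, while voluntariness of use acts only on social influence.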

Methodology

The term methodology refers to the approach taken for the research process, from the theoretical framework and hypothesis to the gathering and analysis of data, while the term method refers to the various means by which data can be collected and analyzed. The methodological assumption is thus concerned with the whole process of the research, from the theoretical underpinning to the collection and analysis of the data (Silverman, 2001).

Qualitative and Quantitative Research

Studies that use data cover areas such as economics, unemployment, the health of the economy, science, patterns of demography and others. Different types of data are collected using sources and methods such as databases, reliable government studies, secondary research published in peer-reviewed journals, experiments, observations, interviews and others. The data collected can be placed in two basic categories, quantitative and qualitative. This also determines which type of research a study conducts: quantitative or qualitative.

Denzin (2000) described quantitative research as “the research which gathers data that is measurable in some way and which is usually analyzed statistically”. This type of data is mainly concerned with how much there is of something, how fast things are done, and so on. The data collected in this instance is always in the form of numbers. To obtain quantitative data, one should have a specific framework about what has to be researched, what should be known, types of inputs that are admissible and so on. Such an approach can help in designing the questionnaire, making observations and so on. Denzin also defined qualitative research as “the research that gathers data that provides a detailed description of whatever is being researched”. Both types of research have their supporters and detractors and while some claim that quantitative research is much more scientific, others argue that qualitative research is required to examine a specific issue in depth.

Researchers who support quantitative research argue that numerical data can be statistically analyzed and that in this way it can be established whether the data is valid and reliable and whether it can be generalized. Numerical data can also be compared with other studies that use the same measures and the same scales. With qualitative research, this is not so easily achieved, as no specific method or scale of measurement is kept. This is the main disadvantage of qualitative research: findings cannot be generalized to larger populations with a large degree of certainty and validity. This happens because the findings are not tested and evaluated statistically to establish whether they are due to chance or whether they are statistically significant, and to what extent.

Another advantage of quantitative over qualitative research is that qualitative research is descriptive and often subjective, as it depends on the researcher’s perspective or on how the researcher registers certain behaviors. Another researcher conducting the same study may interpret the same qualitative data in a completely different way. Quantitative research does not have this disadvantage, as all the data is in the form of numbers and can therefore be interpreted in only one way, that given by the objective value of each number. However, qualitative research also has many advantages that quantitative research does not offer. It is usually through this type of research that a rich, in-depth insight can be gained into an individual or a group, by being far more detailed and by recognizing the uniqueness of each individual. This type of research recognizes the importance of the subjective feelings of those who are studied.

Qualitative research analysis does not fall into the pitfall of being ‘forced’ to fit all its values into certain numerical categories. Not all phenomena can be adequately assigned a numerical value, and when this is done, they lose much of their naturalistic reality. Qualitative research can simply describe data for what it is without having to assign it a number. Qualitative research can also give attention to occurrences that are not so common. For example, it is very difficult to find enough participants to run statistical correlations across nations on whether women are more accident-prone and more likely to indulge in rash driving, because women would not be willing to take part in such studies. In such cases, quantitative research is impossible, and it is only through qualitative research that such cases can be examined in depth to arrive at specific findings and results (Byrne, 2002).

Data Gathering

Gathering data is a very important phase, and due consideration must be given to the time frame of the research.

Single and Multiple Methods

It is not possible to recommend a single data collection method for every project, since each project has different requirements. In many cases, the use of multiple methods is essential. Combining methods, such as survey instruments and document review, is recommended because it gives a better overview of the data. Using several methods also highlights discrepancies between them and reduces the bias introduced by any single method. The use of multiple methods is most feasible when the project requires large-scale analysis spread across multiple sites. Multiple methods also require more manpower and resources, and these are usually available for larger projects (Denzin, 2000).

Sample Selection

The sample to be researched largely determines the data collection method that is used. Surveys are better suited to obtaining information from individual participants, while focus groups require a different method since the groups are diverse. The sample size also depends on the project requirements and the group that has to be studied. While a large number of subjects is preferable since the results are more reliable, the cost of studying such large samples increases. If the project has a sufficient budget allocation, then it is possible to include larger samples in the study (Byrne, 2002).

Cost Considerations

Cost is an important aspect of research projects, and the choice of data collection method depends on the budget. Tasks such as running observations and reviewing program and project documents can be carried out at lower cost, but tasks such as designing survey instruments, administering them to subjects and analyzing the results may need the help of an external evaluator. In some cases, staff would have to be sent for training. When standard tests and analyses are to be used, external staff and experts may have to be involved. For storing and archiving data, software would have to be used so that the data can be analyzed as required. Since project budgets tend to be smaller in the initial stages, effort should be spent early on creating data collection instruments and tools that can meet future requirements as the program evolves and moves across different phases (Byrne, 2002).

Sample Size

The sample size used in research has always created disagreements and controversies. Various ethical and statistical issues arise, and these need to be addressed properly. When a very large sample is used, the ethical issue of wasting resources arises, while a sample that is too small raises a different ethical issue, since the study may be unable to support its conclusions. When the effect under study is large, a statistically significant difference may be observed even with a smaller sample.

However, when a smaller sample is used, a statistically significant difference may emerge both when a real difference exists and when there is none. Freiman (1970) reported on a study of clinical trials that showed negative results for certain parameters of the effectiveness of a treatment; when the results were examined further, it was found that, because of the small sample size, 50% of the results and methods used were not adequate to cover 70% of the improvements. Many researchers, when faced with a shortage of resources, or when a bigger sample is not available or would take too much time to collect, tend to use smaller samples in the hope that the sample is representative of a wider section of the data. However, in many cases this is misleading, and researchers would be held responsible for major errors that were caused by ignorance rather than by misconduct. In research, ignorance does not free a researcher from charges of misrepresentation, and such practices cannot be excused (Freiman, 1970).
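The link between sample size and the chance of detecting a real difference can be illustrated with a short calculation. The sketch below is only an approximation under stated assumptions: it uses a two-sided, two-sample z-test with equal group sizes and a known common standard deviation, and the function name `power_two_sample_z`, the effect size of 0.5 and the sample sizes are all hypothetical values chosen for illustration, not figures from the studies cited above.

```python
from statistics import NormalDist


def power_two_sample_z(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    effect_size is the standardized difference between the group means
    (mean difference divided by the assumed common standard deviation).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic when the effect is real.
    shift = effect_size * (n_per_group / 2) ** 0.5
    # Probability that the statistic falls beyond either critical value.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


# Hypothetical scenario: a medium effect studied with growing samples.
for n in (10, 50, 200):
    print(f"n per group = {n:>3}: power = {power_two_sample_z(0.5, n):.2f}")
```

Under these assumptions, a small sample leaves a large chance of missing a real effect, which is the kind of risk described above for negative results from small trials.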

Describing Data

While gathering data is one part of the research, interpreting the data is equally important. Different classifications are used to identify data. A variable is an item of data; examples include gender, test scores and weight. The values of a variable vary from one observation to another. Variables are classified as qualitative (non-numerical) or quantitative (numerical), and quantitative variables are further classified as discrete (counts) or continuous (measures) (Silverman, 2001).

Qualitative Data: This data describes the quality of something in a non-numerical format. Counts can be applied to qualitative data, but one cannot order or measure this type of variable. Examples are gender, marital status, geographical region of an organization, job title, etc. (Silverman, 2001).

Qualitative data is usually treated as Categorical Data. With categorical data, the observations can be sorted into non-overlapping categories according to their characteristics. As an example, apparel can be categorized by color. The parameter ‘color’ would have non-overlapping values such as red, green, orange, etc. People can be categorized by gender, with values such as male and female. When selecting categories, care should be taken to frame them properly, so that each observation belongs to only one category and cannot fall into several. Qualitative data is analyzed using frequency tables, modes (the most frequently occurring values) and graphs such as Bar Charts and Pie Charts (Silverman, 2001).
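The frequency table and mode mentioned above can be sketched in a few lines. This is a minimal illustration, and the list of apparel colors is hypothetical data invented for the example.

```python
from collections import Counter

# Hypothetical observations: the color of each apparel item in a sample.
colors = ["red", "green", "red", "orange", "green", "red"]

# Frequency table: each observation falls into exactly one category.
freq = Counter(colors)
total = sum(freq.values())

# Mode: the most frequently occurring category.
mode, mode_count = freq.most_common(1)[0]

for category, count in freq.most_common():
    print(f"{category:<8}{count:>3}{count / total:>8.1%}")
print("mode:", mode)
```

Because the categories do not overlap, the counts in the table always add up to the number of observations.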

Quantitative Data: Quantitative or numerical data arise when the observations are frequencies or measurements. The data are said to be discrete if the measurements are integers, e.g. number of employees of a company, number of incorrect answers on a test, number of participants in a program. The data are said to be continuous if the measurements can take on any value, usually within some range (e.g. weight). Age and income are continuous quantitative variables. For continuous variables, arithmetic operations such as differences and averages make sense. Analysis can take almost any form, such as creating groups or categories and generating frequency tables, and all descriptive statistics can be applied. Effective graphs include Histograms, Stem-and-Leaf Plots, Dot Plots, Box Plots, and XY Scatter Plots for two or more variables.
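As a brief illustration of the descriptive statistics and grouping described above, the sketch below summarizes a continuous variable and groups it into intervals, as a histogram would. The weights are hypothetical values, and the 10 kg interval width and the helper name `bin_label` are assumptions made for the example.

```python
from collections import Counter
from statistics import mean, median, stdev

# Hypothetical continuous measurements: body weight in kilograms.
weights_kg = [61.2, 70.5, 68.0, 82.3, 75.1, 59.8, 90.4, 73.6]

# Averages and spread are meaningful for continuous data.
summary = {
    "mean": mean(weights_kg),
    "median": median(weights_kg),
    "stdev": stdev(weights_kg),  # sample standard deviation
}


def bin_label(value, width=10):
    """Return the interval (for example '70-80') that contains value."""
    lower = int(value // width) * width
    return f"{lower}-{lower + width}"


# Grouped frequency table, the tabular form of a histogram.
bins = Counter(bin_label(w) for w in weights_kg)

for name, value in summary.items():
    print(f"{name:<7}{value:>7.2f}")
for label in sorted(bins, key=lambda s: int(s.split("-")[0])):
    print(f"{label:<7}{bins[label]:>3}")
```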

Some variables can be treated only as ranks (ordinal data); they have a natural order, but their values are not strictly measured. Examples are age group (taking the values child, teen, adult, senior) and Likert Scale data (responses such as strongly agree, agree, neutral, disagree, strongly disagree). For these variables, the differences between contiguous points on the scale need not be the same, and the ratio of values is not meaningful. Suitable analyses include frequency tables, the mode, the median and quartiles; suitable graphs include Bar Charts, Dot Plots, Pie Charts, and Line Charts with two or more variables (Silverman, 2001).
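For rank-type variables such as Likert responses, the median and mode can be found by working with the position of each answer on the scale rather than with arithmetic on the values. The following is a minimal sketch; the responses are hypothetical, and `median_low` is used here as one reasonable choice because it always returns a value that actually occurs in the data.

```python
from collections import Counter
from statistics import median_low

# The scale in its natural order; only the order is meaningful.
SCALE = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]
RANK = {label: position for position, label in enumerate(SCALE)}

# Hypothetical survey responses.
responses = ["agree", "neutral", "strongly agree", "agree",
             "disagree", "agree", "neutral"]

# Convert to ranks, take the median rank, and map it back to a label.
ranks = [RANK[r] for r in responses]
median_label = SCALE[median_low(ranks)]

counts = Counter(responses)
mode_label = counts.most_common(1)[0][0]

# Frequency table printed in scale order.
for label in SCALE:
    print(f"{label:<18}{counts[label]:>3}")
print("median:", median_label)
print("mode:", mode_label)
```

No mean is computed, since the distance between neighboring points on the scale is not assumed to be equal.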

Research Paradigm and Method

Typically, the research design, methodology and approach are driven by the research question being scrutinized. Galliers (1991) suggests that, depending on the field of research, several research approaches and methods may be considered appropriate; this view is shared by Vitalari (1985). The research paradigm influences the researcher’s selection of an appropriate research method and approach, which may be either qualitative or quantitative. In some cases, as stated by Creswell (2003), mixed method procedures, which incorporate elements of both qualitative and quantitative research, are used; these are gaining validity and legitimacy within the social and human sciences.

Research Paradigm

The paradigm adopted in any research has important implications for methodology decisions. Orlikowski (1991) identifies three paradigms evident in Information Systems research: the Positivist paradigm, the Interpretivist paradigm and the Critical theory paradigm.

The Positivist Paradigm

Positivism, as stated in Neumann (1994), sees social science as an “organized method for combining deductive logic with precise empirical observations of individual behavior to discover and confirm a set of probabilistic causal laws that can be used to predict general patterns of human activity”. This objective can be achieved by searching for regularities and causal relationships between fundamental elements. There are two major approaches in research, the scientific and the Interpretivist. The scientific approach is based on empirical study, which corresponds with the rigid nature of positivism. Positivism has been the dominant paradigm of Information Systems research. Orlikowski (1991) stated that 97 percent of the academic research conducted within the USA corresponded to the positivist paradigm. More recently, Interpretivism has gained wider acceptance, and critical theory has been discussed and used. While paradigms have imprecise boundaries and include numerous variations, common themes for each paradigm can be identified.

Firstly, positivist research is based mainly on a deductive style of reasoning, as used in natural science. In other words, it is based on the belief that the description of the world’s phenomena is reducible to observable facts and mathematical relationships. The positivist paradigm focuses on numerically measurable events and scientific study. Such research is often concerned with hypothesis testing and is used to discover natural laws so that people can predict and control events. Fact and evidence are two words primarily associated with the positivist paradigm. The positivist paradigm utilizes quantitative data, often using large samples, where data is collected through experiments, questionnaires, content analysis and existing statistics. While the accuracy and high reliability of a positivist approach are clear, criticism has been raised concerning the depth of understanding gained.

Arguments against positivism and in support of the Interpretivist paradigm are based on the claim that quantitative methods produce artificial and sterile results. These results are argued to be incapable of representing the complexity of social realities. People are reduced to numbers, and abstract laws and formulas are arguably not relevant to the actual lives of real people and have low validity (Neumann, 1994).

The Interpretivist Paradigm

Interpretivism, as defined by Neumann (1994), is the “systematic analysis of socially meaningful action through the direct detailed observation of people in natural settings to arrive at understandings and interpretations of how people create and maintain their social worlds.” Interpretivism is related to the theory of hermeneutics, which emphasizes detailed examination and assessment of text, such as written words. This paradigm is more established in Information Systems research in Europe than in the United States of America. In contrast to positivism, the Interpretivist paradigm is particularly concerned with qualitative data. This data is rich and can be examined for social meaning. Qualitative approaches take the stance that information about the world’s phenomena, when reduced to numerical form, loses most of its important information and meaning. In other words, Interpretivism does not try to generalize from a carefully selected sample to a specified population, but rather to develop a deep understanding which may then inform understanding in other contexts.

Methodologically, research within the Interpretivist paradigm uses small samples, open-ended questions, unstructured interviews, individual case studies, diary methods, participant observation and the like. Research using these techniques has high construct validity and realism, but is more suited to theory generation. Like the positivist paradigm, the Interpretivist approach has weaknesses. It is difficult to replicate interpretivist work because the data and findings are socially constructed between the respondents and the researcher or researchers. Positivist criteria of validity and reliability cannot be easily applied. Rather, truth and trustworthiness are used as criteria and are established through different means.

The Critical Theory Paradigm

Critical theory is derived from the works of Marx, Freud, Marcuse and Habermas (Neumann, 1994). Critical theorists disagree with what is viewed as the anti-humanist and conservative values of positivism and the passive subjectivism of Interpretivism. Critical theorists go beyond seeking understanding of an existing reality and critically evaluate the social reality being studied to implement improvements to it. Research may result in strategies to reveal contradictions, empower subjects and initiate action. Critical theory is receiving increased attention from Information Systems researchers.

This study is based in the positivist paradigm. The research asks target respondents questions in a written questionnaire to collect objective statistical data. In terms of data collection, there is no manipulation of the situation, with respondents answering numerous questions in a short period (Neumann, 1994). The data when obtained is expected to be precise with high reliability, so that when measures are repeated, the findings have comparable results. Despite its shortcomings, the positivist approach is well matched to the objectives of this study.

Firstly, the study is based on hypothesis testing rather than theory development. Secondly, testing diffusion and adoption theories means that data collection encompasses a broad demographic scope, which again supports the need for structured questionnaires to collect precise data.

Research Methods of the Positivist Paradigm

Galliers (1992) provides a list of methods or tactics suitable for all types of business and management researchers. It is important for the researcher to be familiar with the characteristics of these approaches, as they will determine the techniques which will be used to collect evidence and influence how the evidence will be analyzed. Some of the following approaches are predominantly positivist, while some may be used with either a positivist or a phenomenological approach.

Forecasting Research

Forecasting research tends to be associated with mathematical and statistical techniques of regression and time series analysis (Collopy and Armstrong, 1992). This type of research may also be regarded as falling under the heading of mathematical simulation. These techniques allow projections to be made based on past or historic evidence. This is usually a highly quantitative approach in which mathematical models are fitted to empirical data or evidence points. This research method was not chosen as it attempts to establish relationships between different sets of historical evidence and to understand why these relationships exist.
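The idea of fitting a mathematical model to historic evidence and projecting it forward can be shown with the simplest case, a straight-line trend fitted by ordinary least squares. This is only a sketch of the general technique, not the specific procedures of the cited authors; the observations and the helper name `fit_line` are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historic evidence: one observation per period.
periods = [0, 1, 2, 3, 4]
observed = [102, 110, 118, 131, 139]

slope, intercept = fit_line(periods, observed)

# Project the fitted trend one period beyond the data.
next_period = 5
forecast = intercept + slope * next_period
print(f"trend: {intercept:.1f} + {slope:.1f} * period")
print(f"forecast for period {next_period}: {forecast:.1f}")
```

A projection of this kind assumes that the past trend continues unchanged, which is why the approach depends so heavily on the quality of the historic evidence.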

Futures Research